double densed uncounthest hour of allbleakest age with a bad of wind and a barrel of rain

double densed uncounthest hour of allbleakest age with a bad of wind and a barrel of rain is an in-progress piece for resonators and brass. I’m keeping a composition log here as I work on it.

There are sure to be many detours. Getting it in shape might involve:

- more work on libpippi unit generators & integrating streams into astrid

- testing serial triggers with the solenoid tree & relay system built into the passive mixer

- finishing the passive mixer / relay system and firmware (what to do about the enclosure!?)

- general astrid debugging and quality-of-life improvements…

- composing maybe?

Sunday January 28th

Been taking some time again this weekend (while working on the new Audiobulb tape edition!) to keep moving bits of the current (soon-to-be-old) standalone astrid-seq, astrid-dac and astrid-adc into library functions in astrid.c.

The first steps of this were setting up wrappers for the jack callback. These set up jack and take the instrument callback and wrap it in the real jack callback. That’s a fair bit of what the current adc and dac programs do, but the dac program also (and this is its main job in current astrid) has an extra thread that listens for serialized buffers to arrive from an associated renderer program. When deserialized, these buffers are placed into the astrid scheduler queue for playback and mixing in the jack callback.

There’s also the astrid-seq program. This program basically exists to schedule astrid messages.

It’s a weird situation, maybe, but I think it helped me develop each piece in isolation. Even if these pieces end up unused, they’ll likely stick around for a while, at least for development.

For example there’s a single well-known message queue for the astrid-seq program that all instruments relay their sequences through now. If they all embed a message sequencer, then astrid can standardize on all messages being sent to instrument message queues, including messages scheduled for the future.
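
As a rough illustration of what an embedded message sequencer could look like, here is a minimal sketch in plain python. The `MessageSequencer` class and its method names are made up for this example and are not part of astrid; it just holds messages in a heap until their scheduled time arrives.

```python
import heapq
import itertools

class MessageSequencer:
    """Holds messages until their scheduled time, then releases them in order."""

    def __init__(self):
        self._heap = []
        # Tie-breaker so messages scheduled for the same time keep insertion order
        self._counter = itertools.count()

    def schedule(self, when, message):
        heapq.heappush(self._heap, (when, next(self._counter), message))

    def due(self, now):
        """Pop and return every message scheduled at or before `now`."""
        ready = []
        while self._heap and self._heap[0][0] <= now:
            _, _, message = heapq.heappop(self._heap)
            ready.append(message)
        return ready

seq = MessageSequencer()
seq.schedule(10.0, 't sineosc')
seq.schedule(0.0, 'p sineosc freq=220 length=0.9')

first = seq.due(0.5)    # only the message scheduled for time 0
later = seq.due(10.0)   # the retrigger scheduled for 10 seconds
```

An instrument would poll something like `due()` from its own message thread and push the results onto its own queue, so nothing has to be relayed through a shared sequencer process.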

I like this because it means astrid can just be a single instrument. That instrument could (once these new interfaces are exposed via cython) be a single python file run simply like python myinstrument.py, or anything that uses these components via libpippi and astrid C interfaces directly: like the astrid-pulsar program in progress for rain.

Currently all the pieces of astrid are orchestrated by a console script which starts the programs in the background, sends commands to instruments and etc etc. This console script can be moved into library functions mostly, too. If it’s readline-based (actually, I like antirez’s readline replacement) and astrid C implements the REPL, both programs can optionally expose a command interface, or the standalone console interface could be implemented as a new special program.

The nice thing is because these things are already set up to be independent components with any number of instances talking to each other (the seq program was designed to run as a single instance, but the dac programs are meant to just be abstract outputs and currently support multiple instances for multichannel routing) then in their library form, these features can be picked and chosen from for each instrument, while still only needing to know instrument names to be able to communicate with any other instrument. (Some kind of registry to discover running instruments would be good, too. This could probably just be a directory filled with all their filesystem message queue handles.)
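
A directory-as-registry could be as small as the sketch below. The `register` and `discover` helpers and the queue names are hypothetical, and a temporary directory stands in for wherever the real handles would live.

```python
import tempfile
from pathlib import Path

def register(registry_dir, name, queue_handle):
    """Record a running instrument as a file named after it, holding its queue handle."""
    path = Path(registry_dir) / name
    path.write_text(queue_handle)
    return path

def discover(registry_dir):
    """Return {instrument name: queue handle} for every file in the registry."""
    entries = sorted(Path(registry_dir).iterdir())
    return {p.name: p.read_text() for p in entries if p.is_file()}

with tempfile.TemporaryDirectory() as registry:
    # Hypothetical queue names, only for illustration
    register(registry, 'sineosc', '/sineosc-msgq')
    register(registry, 'pulsar', '/pulsar-msgq')
    found = discover(registry)
```

An instrument would remove its own entry on shutdown; stale entries from crashed instruments are the obvious thing this sketch ignores.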

Monday January 22nd

I have the piano roll bug again. I spent a while tonight sketching some things with the Helio piano roll editor. It’s really nice, very well done. I think I even like it more than the Reason piano roll, my old stomping grounds. Still, it makes a number of (very reasonable and fine) choices that keep me from trying to adopt it into my workflow, excited as I was to see this really nice standalone piano roll recently. It even has support for microtonal tuning, but I guess for me the problematic choices are how grids are approached, enforced and modulated.

For example, what if the pitch space of the piano roll had snap / free modes like the rhythm / tempo space does? The vertical axis would be log frequency with any arbitrary harmonic grid overlay of some scale in some tuning system… but it would be great to be able to toggle snapping off and draw freeform curves in frequency space, too.

From the perspective of the GUI, since I’m doing this in cairo and cairo supports svg-style drawing modes, it probably makes sense to try sampling as you draw and use those points for the svg bezier curve style where you can just keep stringing points together one after another without bothering to calculate the control points. Translating cairo curves into pippi control curves might be tricky? Whenever a curve goes through the designated render space of an event so to speak, it will need to be extracted as a vector to give the instrument script context so that the pitch curve can actually be followed.

In other words the normal piano roll drawing mode where notes are a click-drag-release to set the start and end points could have a complementary mode which samples points in between for the curve, too.
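
To make the "extract it as a vector" step concrete, here is a small sketch that resamples the points captured while drawing into a fixed-size vector covering one event's time span. It uses plain linear interpolation between the sampled points rather than cairo's curves, and `curve_for_event` is a made-up name, not a pippi or astrid function.

```python
def curve_for_event(points, start, end, size=8):
    """Resample a drawn curve over [start, end] into `size` evenly spaced values.

    `points` is a list of (time, log_freq) samples sorted by time.
    `size` must be at least 2.
    """
    def value_at(t):
        # Hold the first value before the curve begins
        if t <= points[0][0]:
            return points[0][1]
        for (t0, v0), (t1, v1) in zip(points, points[1:]):
            if t0 <= t <= t1:
                if t1 == t0:
                    return v1
                return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
        # Hold the last value after the curve ends
        return points[-1][1]

    step = (end - start) / (size - 1)
    return [value_at(start + i * step) for i in range(size)]

# A triangle-shaped gesture, and an event that spans its peak
drawn = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)]
vector = curve_for_event(drawn, 0.5, 1.5, size=5)
```

The resulting list is the kind of thing that could be handed to the instrument script as context for the event.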

Tempo grids should be a buffer at the top of the pianoroll that can be drawn into, too. Set discrete tempo points, or draw a curve between them.

I’d like to make snapping a property of the event/note, too. There would still be a snap mode and a free mode, but drawing some events in snap mode and then switching to free mode would preserve their snappiness. Visually, snapped notes should be called out differently than free notes. Maybe with something like a serif on the line. This way, tempo adjustments to a passage with a lot of snapped events will move the events along the snap grid if it changes. Selecting events should allow them to be toggled between snapped/quantized and free if needed, too.

If it was implemented in a way that let you convert free events into snapped events without moving them (maybe there are two actions: convert to snapped and move-quantize, or convert to snapped and preserve position) then I suppose you could lay out a number of quantized snapped events and a number of unquantized free events, then convert the free events into snapped events without moving their positions, and finally adjust the underlying tempo grid to modulate it freely during those sections so that the free events also move proportionally with the tempo delta? That would be fun.
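
One way to think about snapping as a property of the event: snapped events store their position in beats, free events store it in seconds. The sketch below assumes a single constant tempo rather than a drawn tempo curve, and all of the names are invented for the example.

```python
from dataclasses import dataclass

@dataclass
class Event:
    position: float        # beats if snapped, seconds if free
    snapped: bool = False

def onset_seconds(event, bpm):
    """Where the event lands in time under a constant tempo."""
    if event.snapped:
        return event.position * 60.0 / bpm
    return event.position

def snap_preserving_position(event, bpm):
    """Attach a free event to the grid without moving it (fractional beats allowed)."""
    return Event(event.position * bpm / 60.0, snapped=True)

def snap_quantized(event, bpm, grid=1.0):
    """Attach a free event to the grid and move it to the nearest grid line."""
    beats = event.position * bpm / 60.0
    return Event(round(beats / grid) * grid, snapped=True)

free = Event(1.3)                                # 1.3 seconds, off the grid
kept = snap_preserving_position(free, bpm=120)   # same onset, now in beats
moved = snap_quantized(free, bpm=120, grid=0.5)  # pulled to the nearest half beat
```

With this split, halving the tempo doubles the onset time of `kept` and `moved` while `free` stays at 1.3 seconds, which is the proportional movement described above.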

Thursday January 11th

Spending a few days working on translating the Audiobulb & Friends tape booklets into a mini website.

Wednesday January 10 2024

This is a sketch for a revised standalone version of astrid python scripts.

I’ve renamed the trigger callback to seq and added some new trigger types. The midi trigger is broken into cc and note, and the new serial triggers take a single string / byte blob. I also replaced the play and trigger methods with a single msg handler for all astrid message types that has an interface which is just the same string that would be used in the astrid REPL, or as an argument to the astrid-msg program.
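
For a sense of what a single string interface implies, here is a toy parser for messages shaped like the ones in the sketch in this entry (`p sineosc freq=220 length=0.9`). The grammar is only inferred from those example strings; the real astrid message parser may well differ, and `parse_msg` is a name made up here.

```python
def parse_msg(text):
    """Split a message string into (type, instrument, params).

    Assumes whitespace-separated fields: a message type, an instrument name,
    then zero or more key=value pairs. Values are left as strings.
    """
    msg_type, instrument, *pairs = text.split()
    params = {}
    for pair in pairs:
        key, _, value = pair.partition('=')
        params[key] = value
    return msg_type, instrument, params

parsed = parse_msg('p sineosc freq=220 length=0.9')
retrigger = parse_msg('t sineosc')
```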

There’s a new patch callback that uses the new ugen graph. Like the cython implementation, there will probably be a thread watching source changes that reloads the instrument module, and at that point the new graph struct could be constructed and flagged as new. Then in the main jack callback if the new flag is set it could do an atomic swap of the two graph structs before asking for samples from the graph during the callback render phase.

oh…

Swapping graphs like that has the classic problem of resetting the graph state on changes. I don’t really mind that for now since I don’t do much live-coding in performance, but it would be worth looking into allowing ugens to provide a way to serialize and deserialize their params, maybe, which could be used to store the state of individual named ugen instances. Then, when creating the new graph, if those named nodes are still in the graph, their state could be restored, or really preserved through source changes.
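
Here is a rough python model of the swap-and-restore idea. Everything in it is hypothetical: graphs are plain dicts of named nodes rather than the libpippi graph struct, and a lock stands in for the atomic pointer swap that the real jack callback would need (taking a lock in the audio thread is not something to copy).

```python
import threading

class Node:
    def __init__(self, kind, **params):
        self.kind = kind
        self.params = params
        self.state = {}          # running state, e.g. oscillator phase

    def serialize(self):
        return dict(self.state)

    def deserialize(self, saved):
        self.state.update(saved)

class GraphHolder:
    """Keeps the live graph plus a pending replacement built by a reloader thread."""

    def __init__(self, graph):
        self.live = graph
        self._pending = None
        self._lock = threading.Lock()

    def submit(self, new_graph):
        # Carry state over for nodes that kept both their name and their kind
        for name, node in new_graph.items():
            old = self.live.get(name)
            if old is not None and old.kind == node.kind:
                node.deserialize(old.serialize())
        with self._lock:
            self._pending = new_graph

    def swap_if_new(self):
        """Called at the top of the audio callback, before rendering."""
        with self._lock:
            if self._pending is not None:
                self.live, self._pending = self._pending, None
        return self.live

old = {'s0': Node('sine', freq=100)}
old['s0'].state['phase'] = 0.25
holder = GraphHolder(old)

new = {'s0': Node('sine', freq=200), 's1': Node('sine', freq=100)}
holder.submit(new)
live = holder.swap_if_new()
```

After the swap, `s0` has the new frequency param but keeps its old phase, and `s1` starts fresh. The sketch reads the old node state from the reloader thread while the callback might still be writing it, which a real implementation would have to deal with.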

The biggest change is the new exposed Instrument abstraction, which was previously just an internal thing. The standalone astrid C programs are working out, but I miss being able to compose in python so this seems worth spending time on.

The current orc folder-full-of-instruments thing with a single console script and implied session management is clunky. I like the idea of astrid being library-like, too.

Self-contained astrid instrument scripts can drop themselves into the astrid REPL when run and have the same console interface available.

Instruments already expose all their message queues in the filesystem based on the instrument name, so communication between instruments could be just a matter of knowing the instrument name. (And maybe later a network address.)

I’ve just pulled the old named pipe message queue implementation out and settled on POSIX message queues (which are easier to use, I think) but that also means all external communication has to happen through astrid-msg, and that I’ve essentially dropped macos support for now. There’s a project to bring POSIX message queues to macos which might fix that (especially combined with cosmopolitan C) down the road, though.

```python
from pippi import dsp, oscs, ugens
from astrid import Instrument

def seq(ctx):
    events = []

    # Trigger the async play at time 0
    events += [ ctx.t.msg(0, 'p sineosc freq=220 length=0.9') ]

    # Send a serial message at 0.3 seconds
    events += [ ctx.t.serial(0.3, '/dev/ttyACM0', 'foo') ]

    # Send MIDI CC0 changes to device 1 on channel 2
    events += [ ctx.t.cc(2.2, 0, 60, device=1, channel=2) ]
    events += [ ctx.t.cc(3.1, 0, 80, device=1, channel=2) ]

    # Send MIDI note on/off (0.9 seconds) to device 3 on channel 0
    events += [ ctx.t.note(2.2, length=0.9, freq=220, amp=0.4, device=3) ]

    # Retrigger the sequence in 10 seconds
    events += [ ctx.t.msg(10, 't sineosc') ]

    # Hand the scheduled events back to astrid
    return events

def patch(ctx):
    graph = ugens.Graph()
    graph.add_node('s0', 'sine', freq=100)
    graph.add_node('s1', 'sine', freq=100)
    graph.add_node('m0', 'mult')

    graph.connect('s0.output', 's1.freq', 1, 200)
    graph.connect('s1.output', 's0.freq', 1, 200)
    graph.connect('s0.freq', 's1.phase', 0, 1, 1, 200)
    graph.connect('s0.output', 'm0.a')
    graph.connect('s1.output', 'm0.b')
    graph.connect('m0.output', 'main.output', mult=0.5)

    return graph

def play(ctx):
    length = ctx.p.length
    freq = ctx.p.freq

    # short (1-3 seconds) sine tone
    out = oscs.SineOsc(freq=freq).play(length) * 0.1

    # pitch modulated graincloud @ half speed
    out = out.cloud(length * 2, speed=dsp.win('sine'))

    # random envelope w/0.04s taper
    out = out.env('rnd').taper(0.04)

    yield out

if __name__ == '__main__':
    with Instrument(name='sineosc') as instrument:
        instrument.run_forever()
```

Tuesday January 9th 2024

Started thinking again how to do message passing within standalone instrument scripts. Swapping a param struct with an atomic pointer, maybe.

Sunday January 7th 2024

Took some interesting first steps experimenting with opening an astrid instrument message queue in the main thread and writing param updates into shared memory space.

Decided to pause on the passive mixer and focus on the relays, motors and solenoids as percussion / activators for the moment.

Today’s real accomplishment was fixing a bunch of long-standing warnings in astrid, though. One of those warnings was about using a deprecated cpython API to initialize the embedded python interpreter. The new API (PyConfig) doesn’t require explicitly setting the python path anymore, which means I can drop the weird dance of setting ASTRID_PYTHONPATH in the environment with the formatted contents of your python’s sys.path and use the default python context instead.

I spent a while thinking through approaches to feeding the wavetable stacks in the standalone pulsar program. Notes in lab journal… but the first thing to try is probably filling the adjacent table on demand based on the position of the morph param: just before a new table is needed for interpolation, grab recent samples from the input ringbuffer.
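
One possible reading of that idea, sketched in python rather than the C of the pulsar program. The `WavetableStack` class is invented for illustration, and it assumes the morph param runs from 0 to 1 across the stack and that a table is refilled only at the moment it becomes the upper neighbor for interpolation.

```python
from collections import deque

class WavetableStack:
    def __init__(self, num_tables, table_size):
        # num_tables must be at least 2 so there is always a pair to interpolate
        self.tables = [[0.0] * table_size for _ in range(num_tables)]
        # Ring buffer holding the most recent `table_size` input samples
        self.ring = deque([0.0] * table_size, maxlen=table_size)
        self._last_upper = None

    def write_input(self, samples):
        self.ring.extend(samples)

    def tables_for(self, morph):
        """Return (lower table, upper table, frac) for a morph position in [0, 1]."""
        pos = morph * (len(self.tables) - 1)
        lower = min(int(pos), len(self.tables) - 2)
        upper = lower + 1
        if upper != self._last_upper:
            # A different adjacent table is about to be used: refill it from recent input
            self.tables[upper] = list(self.ring)
            self._last_upper = upper
        return self.tables[lower], self.tables[upper], pos - lower

stack = WavetableStack(num_tables=3, table_size=4)
stack.write_input([0.1, 0.2, 0.3, 0.4])
lower, upper, frac = stack.tables_for(0.25)
```

Moving the morph position within the same pair of tables leaves them alone; crossing into the next pair grabs whatever the ring buffer holds at that moment.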

Saturday January 6th 2024

Glued one of the mounts for the bass transducers to my child size violin to try running the first steps with standalone astrid programs through it. This is a test program that has 400 pulsar oscs spread across a pretty small param space. It doesn’t sound like much really, but a good first step.

Started thinking about how to break out the control interfaces (message queues, buffer queues, etc) as library features for standalone programs.

Thursday January 4th 2024

Spending some more time with the relay board, I’m suspecting I’ve got the wrong transistors. They seem like the ones on the datasheet with the same labels, but I found someone who’d got the same parts mislabeled… the proper thing to do would be to test the components and verify they do not behave like the datasheet says they should. The lazy thing to do is make a new board with known components, which is probably what I’ll actually do.

Began experimenting with standalone astrid programs that just use libpippi and a wrapper around the jack init code adapted from the dac/adc programs.

Tuesday January 2nd 2024

Tried some new relays from axman out on a new board and found a short on the existing relay board. Signals coming out of the transistors on the relay board are really weird.

Monday January 1st 2024

I felt pretty lost on this project this morning. I spent the day trying to pull all the various pieces together and see if I could nail down what the rain system actually looks like – even if it’s a smaller / simpler version this time around.

- The brains will be astrid, but with some new stream-based instruments that are just small jack programs, basically. Working out a toolkit so these can be created ad hoc, and still communicate with the rest of astrid.

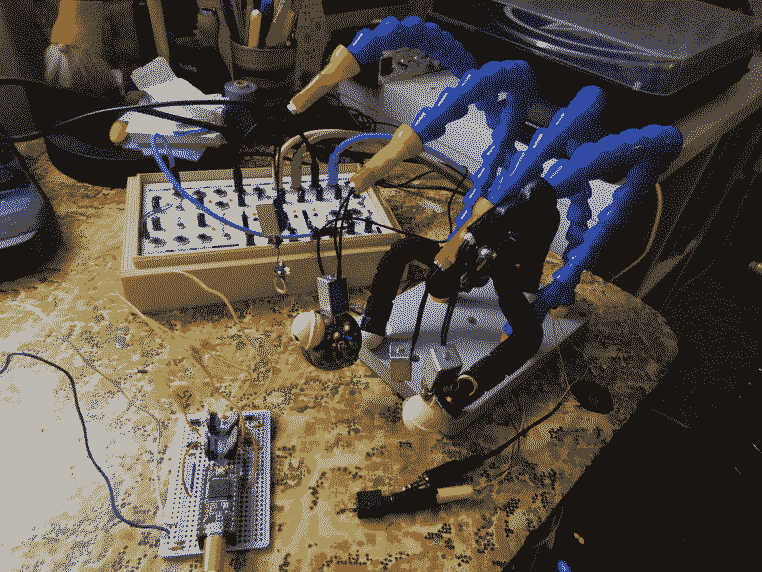

- Teensy + driver board acts as a serial trigger bridge between the laptop and four prepared solenoids (pictured, dangling from bendy tubes)

- Koma field kit is the main input mixer, including the lovelier mic, a battery-powered phantom unit for the trumpet clip mic, induction mic, two inputs from an FX send to a daisy pedal looper, all going through the nice filters and gain into a zoom interface connected to the laptop.

- The passive four channel mixer + amplifier system is the most elusive. I haven’t found a good enclosure or decided on the final affordances… was playing around with the case for an old netbook as an enclosure.

In a vague way I’m thinking of it as a glorified pedalboard for brass, though I don’t plan to play very much brass? As signals flow, microphones of different sorts go into the mixer, which goes into the computer and into the loop pedal. There’s a mic on the solenoids which are sequenced by the laptop. I really hope I can finish the passive mixer system. Wood, plastic and metal resonators in synchronized movement with the solenoid tree percussion…

Log December 2023